The BabyView Project

The BabyView Project is a research initiative dedicated to capturing children’s everyday experiences in high-resolution video recorded from the infant’s perspective, and to using the resulting data to better understand cognitive development. Our dataset is the largest open video dataset of children’s everyday experience to date, both in the number of hours recorded and in the diversity of participating families. Data collection is ongoing.

Here you can find information about our current publications, how to access releases of the dataset, and the specifics of how the BabyView camera is built.

Funding

The BabyView project acknowledges generous support from:

- The Stanford Human-Centered AI Initiative (HAI) Hoffman-Yee grant program

- Schmidt Futures

- Meta, Inc.

- The Stanford Center for the Study of Language and Information John Crosby Olney Fund

- Amazon, Inc.

- Compute support from the Microsoft Accelerating Foundation Models Research (AFMR) program

- NIH K99HD108386 to BLL

Selected Publications

-

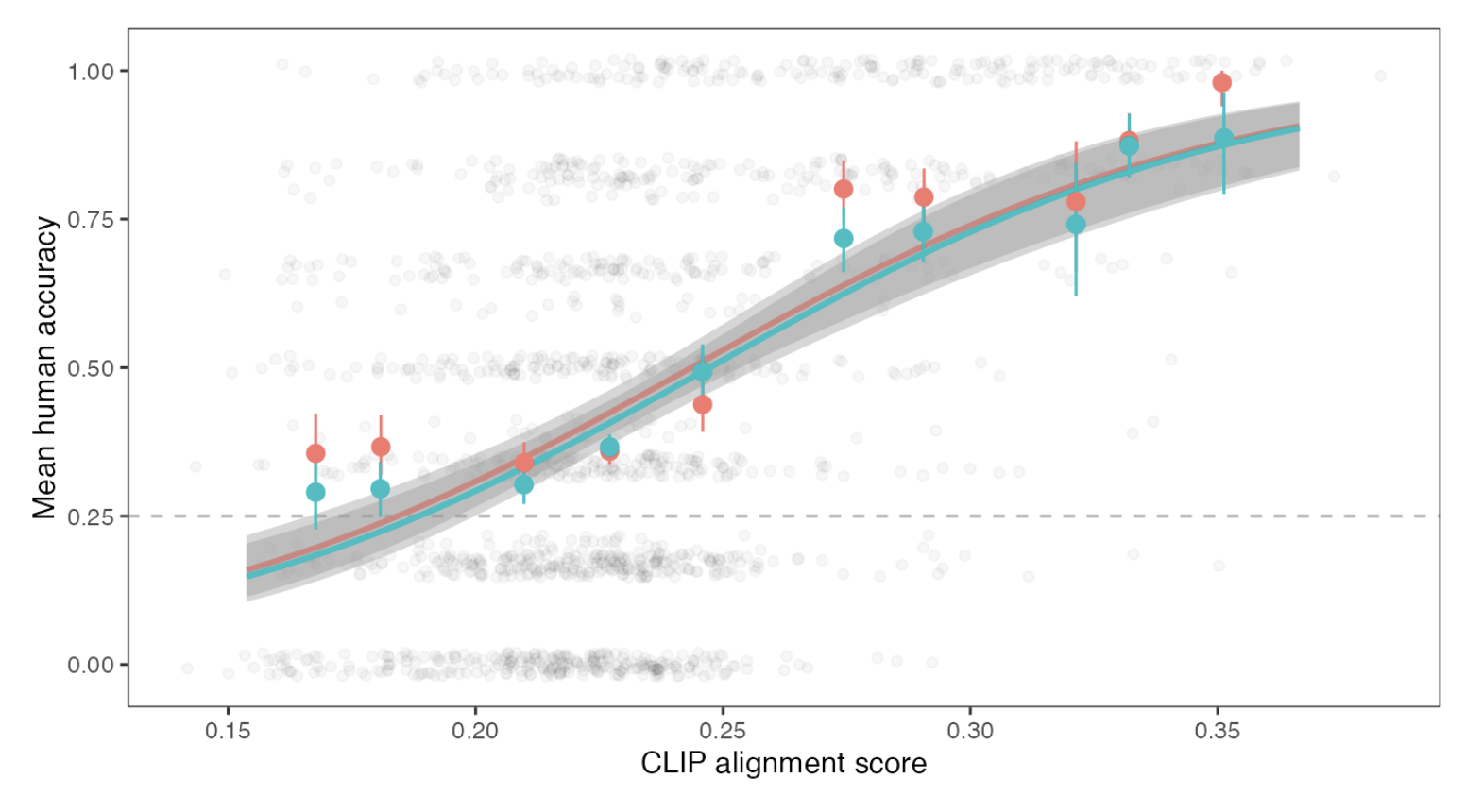

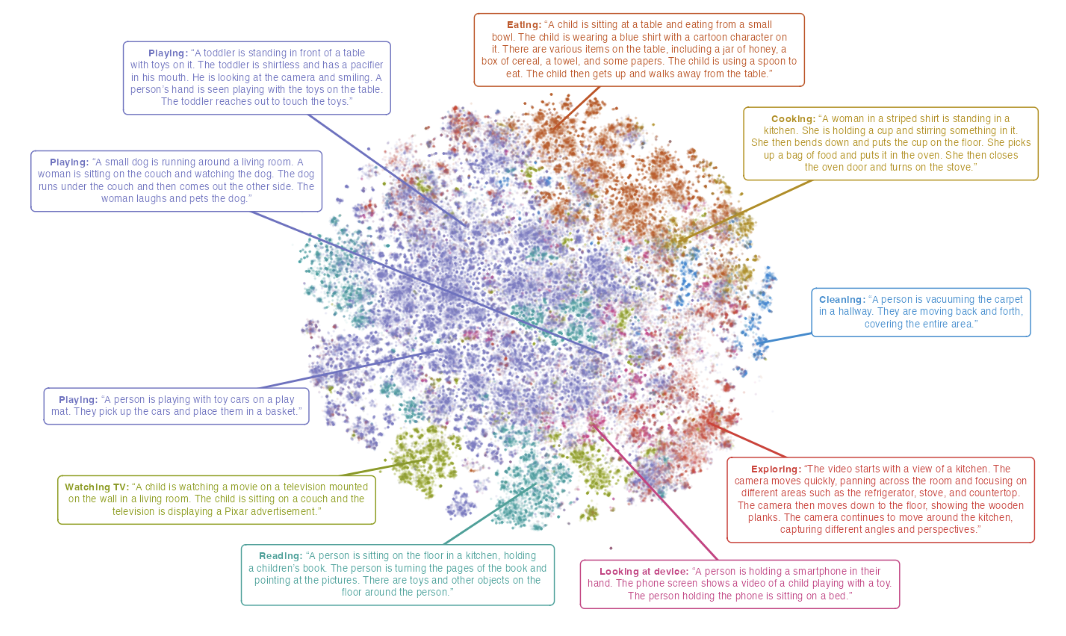

- Assessing the alignment between infants’ visual and linguistic experience using multimodal language models. arXiv preprint arXiv:2511.18824, 2025.

- The BabyView dataset: High-resolution egocentric videos of infants’ and young children’s everyday experiences. In Proceedings of the Cognitive Computational Neuroscience Conference (8-page track), 2025.